Abstract

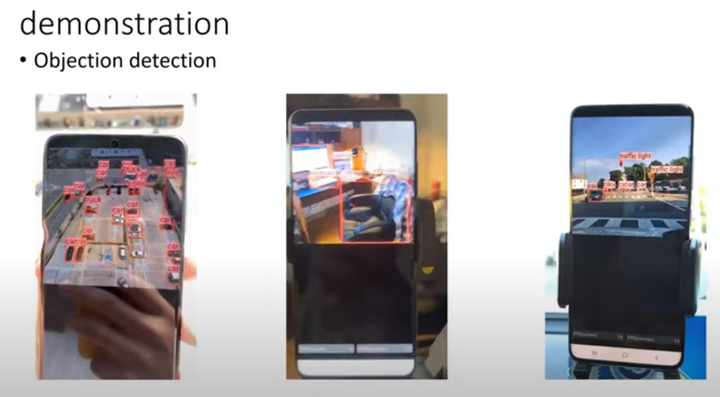

We demonstrate real-time inference on mobile devices by combining model pruning with compiler optimization, across a range of DNN applications including object detection, super-resolution, style transfer, and segmentation.

Date

Aug 15, 2020 1:00 PM

Location

Online meeting